Member-only story

Building an End-to-End Retrieval-Augmented Generation (RAG) Chatbot with Databricks

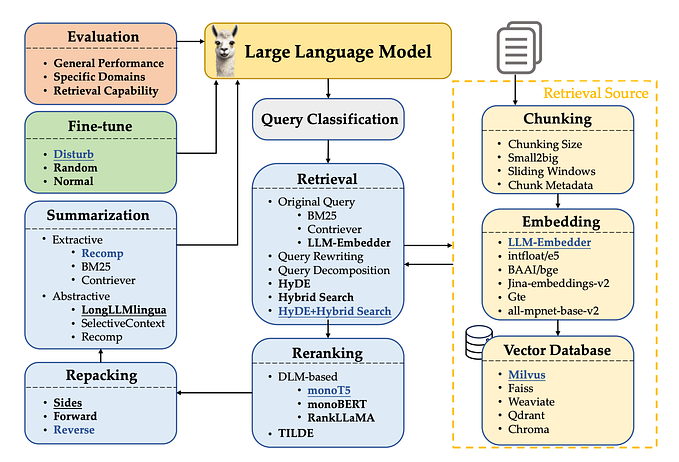

The goal of this article is to leverage recent tools and techniques to implement Retrieval-Augmented Generation (RAG) in Databricks.

We’ll explore how to utilize Databricks’ serving endpoints for LLMs, different chunking strategies, open-source vector stores vs. Databricks Vector Index, and how to maintain chat history.

High level steps which are involved here are:

- Data Preparation: Collect and preprocess text data, removing redundancy and performing exploratory data analysis (EDA).

- Chunking and Embeddings: Split the text into manageable chunks, create embeddings, and filter out less informative chunks.

- Implementing RAG: Develop a question-answering system using an LLM, write prompts, and evaluate results with the RAGAs library.

- Model Deployment and Management: Deploy the model and manage the model using MLflow.

Install libraries

%pip install tiktoken==0.5.2 faiss-cpu==1.7.4 langchain --upgrade mlflow==2.9.2 databricks-genai-inference spacy --upgrade databricks-sdk --upgrade%sh

python -m spacy download en_core_web_smfrom langchain.vectorstores import FAISS

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

from transformers import AutoTokenizer, pipeline

from langchain import HuggingFacePipeline

from langchain.chains…